The Long View on AI

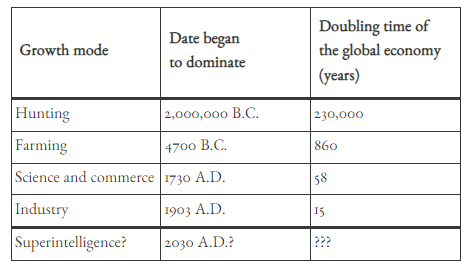

Discussions about AI and the future are improved when contextualized with the most important graph of all time:

This graph has two major implications for AI. First, it is an existence proof for insane speed-ups in economic growth, tech progress and societal change.

From this perspective, it would not be surprising for the growth rate of the world economy to increase by another order of magnitude or more. That would merely continue the straight line of history (on a log-log plot).

It would not be crazy to double average lifespan, or to create heretofore unimaginable forms of construction, destruction, expression, or consumption. Compressing the previous century of technological progress into a decade is just about what we should expect from simple extrapolation.
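A quick check on why "another order of magnitude" in growth rates and "a century of progress in a decade" are the same claim. This is a sketch under simplifying assumptions: constant continuous growth at rate $g$, with "progress" measured by the growth multiple of output $Y$. The 3% figure matches today's global growth cited below; the 30% figure is purely illustrative, not a forecast.

$$
\frac{Y(t+T)}{Y(t)} = e^{gT}, \qquad
\underbrace{0.03 \times 100}_{g_{\text{now}}\,T_{\text{century}}} = 3 = \underbrace{0.30 \times 10}_{g_{\text{fast}}\,T_{\text{decade}}}
$$

Either way output multiplies by $e^{3} \approx 20$, so a tenfold rise in the growth rate packs a century's worth of compounding into ten years.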

This perspective is something that EAs and rationalists understand well, and it (among other things) motivates high P(doom)s. The 20th century brought incredible progress but also terrible risks: the world wars, the rise of authoritarianism, and of course nuclear weapons. Doubling all of this and compressing it into a decade is almost impossible to imagine, despite how likely it seems. Leopold Aschenbrenner’s recent piece is best understood as taking these extrapolations seriously and predicting the consequences.

The second implication of this extrapolation of history is less well understood: things will probably feel normal the whole time. Or at least, our inability to imagine how such a fast-moving future could be a comfortable home for anyone does not prove that it won’t feel normal.

We are all living at the tippy top of the hyperbolic growth curve, in the fastest-changing world ever. Today’s 3% global growth rate is 100x faster than the rate 1000 years ago. Each country and each person has access to so much more power, for both creation and destruction. What could a single squadron of modern soldiers do to the Roman Empire? And our world has millions of such squadrons. No one from 300 years ago could have imagined a world that changes so fast but does not collapse in on itself, where each person holds so much power and faces so much danger and yet feels like they are living a normal life. But that’s what we all do.
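One way to feel the "100x faster" comparison is through doubling times. The sketch below is only illustrative: it assumes steady compound annual growth and reads "100x slower" as roughly 0.03% per year; the helper name `doubling_time_years` is hypothetical.

```python
import math


def doubling_time_years(annual_rate: float) -> float:
    """Years for output to double at a constant compound annual growth rate."""
    # Solve (1 + r)^t = 2 for t; log1p keeps precision for tiny rates.
    return math.log(2) / math.log1p(annual_rate)


today = 0.03                    # roughly 3% global growth per year, as in the text
millennium_ago = today / 100    # assumed reading of "100x slower": about 0.03% per year

for label, rate in [("today", today), ("1000 years ago", millennium_ago)]:
    years = doubling_time_years(rate)
    print(f"{label}: {rate:.2%} per year -> doubles every {years:,.0f} years")
```

Run as written, this gives a doubling time of about 23 years today versus about 2,300 years a millennium ago: the world economy now doubles within a single career rather than once every couple of millennia.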

The point here is not to wave away the possible dangers or to claim everything will work out automatically. Instead, the point is that rapid, unforeseen adaptation to change is also part of the historical record that we’re extrapolating forwards when we continue the lines on these graphs.

Anyone ruling out massive increases in growth rates and other incredible tech changes due to AI is confident in the face of thousands of years of historical evidence to the contrary. However, sketching out visions of this rapid change and asking, rhetorically, “how could society ever hope to deal with this?” also falls short. The pace of change we are comfortable with would be incomprehensible to someone from 1600 and terrifying if they managed to understand it. To avoid this mistake, we should also extrapolate rapid adaptation to rapid change, even when it is difficult to imagine.

These two implications from the long view on AI are too general to support any particular conclusion about P(doom), AI capabilities, or the chance of geopolitical conflict. Instead, they provide a baseline scenario of rapid growth and rapid adaptation that can be edited with specific points about AI. Starting here would improve most AI debates.

I like this idea of a baseline scenario. If we grow GDP by 2% a year forever, that does end up with some pretty crazy results. But in some sense that is also “business as usual”.

Good post! I hadn’t thought about that second bit. I’m curious though: how likely do you think really high rates of growth are over the next few decades? My impression from reading, e.g. https://www.openphilanthropy.org/research/could-advanced-ai-drive-explosive-economic-growth/, is that a naive extrapolation leads to very extreme results, and given that we have had constant rates of growth for the last century in developed countries, saying it’s very likely strikes me as perhaps unreasonable. What are your thoughts?