Four Futures For Cognitive Labor

I just returned from Manifest, a Bay Area rationalist conference hosted by the prediction market platform Manifold. The conference was great and I met lots of cool people!

A common topic of conversation was AI and its implications for the future. The standard pattern for these conversations is dueling estimates of P(doom), but I want to avoid this pattern. It seems difficult to change anyone’s mind this way and the P(doom) framing splits all futures into heaven or hell without much detail in-between.

Instead of P(doom), I want to focus on the probability that my skills become obsolete. Even in worlds where we are safe from apocalyptic consequences of AI, there will be tumultuous economic changes that might leave writers, researchers, programmers, or academics with much less (or more) income and influence than they had before.

Here are four relevant analogies which I use to model how cognitive labor might respond to AI progress.

First: the printing press. If you were an author in 1400, the largest value-add you brought was your handwriting. The labor and skill that went into copying made up the majority of the value of each book. Authors confronted with a future where their most valued skill is automated at thousands of times their speed and accuracy were probably terrified. Each book would be worth a tiny fraction of what it was worth before, surely not enough to support a career.

With hindsight we see that even as all the terrifying extrapolations of printing automation materialized, the income, influence, and number of authors soared. Even though each book cost thousands of times less to produce, the quantity of books demanded increased so much in response to their falling cost that it outstripped the productivity gains and required more authors, in addition to a 1000x increase in books per author, to fulfill it.

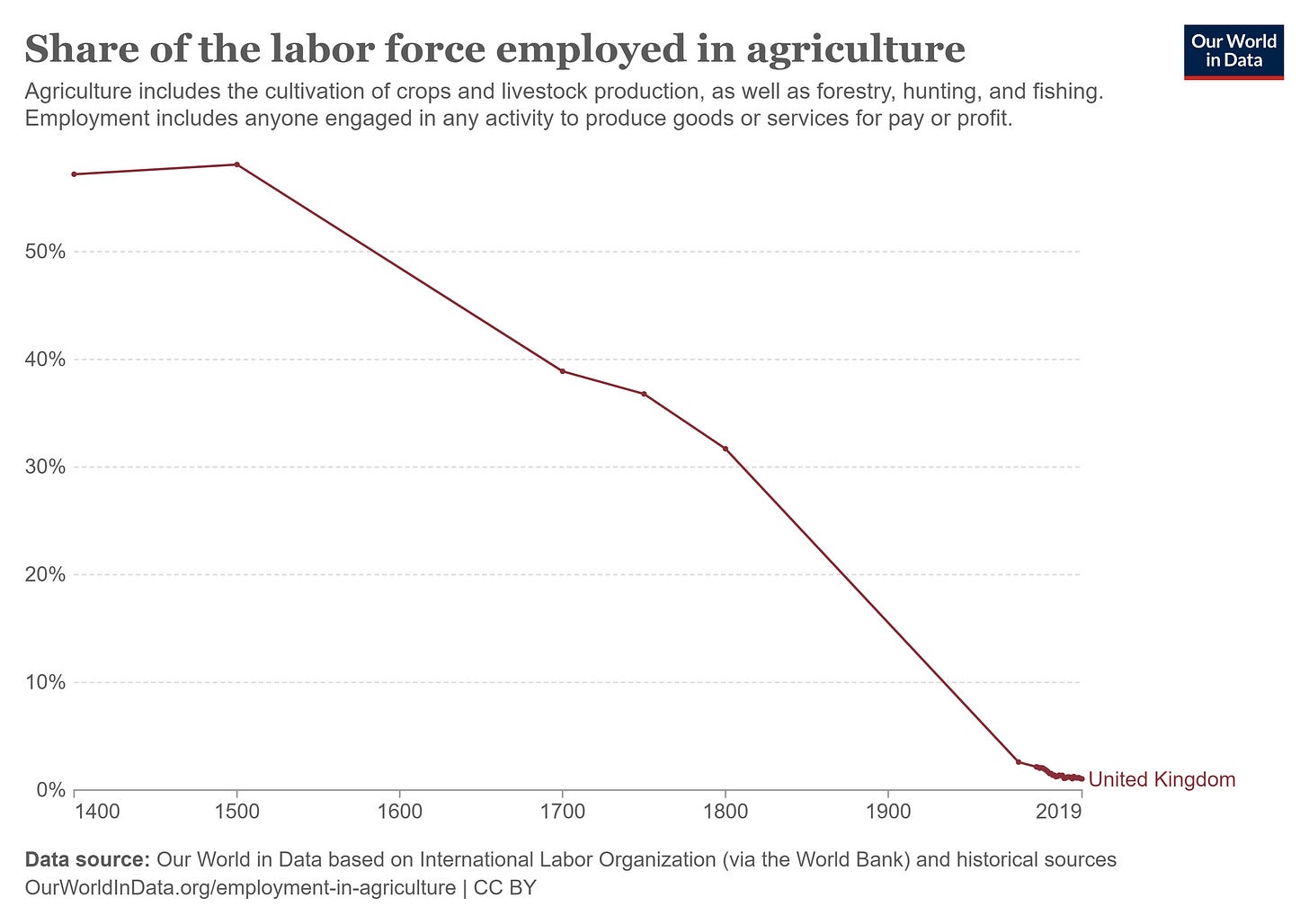

Second: the mechanization of farming. This is another example of massive productivity increases but with different outcomes.

Unlike authors and the printing press, the ratio of the change in production per person to the change in total consumption was not low enough to grow the farming labor force or even sustain it. The per-capita incomes of farmers have doubled several times over, but there are many fewer farmers, even in absolute numbers.
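The difference between these first two analogies can be put as rough arithmetic. Here is a minimal sketch, assuming (my simplification, not something the history above establishes) that prices fall in proportion to productivity gains and that demand has a constant price elasticity. The function name `labor_multiplier` and the elasticity values are hypothetical and purely illustrative, not historical estimates.

```python
def labor_multiplier(productivity_gain: float, demand_elasticity: float) -> float:
    """Factor by which the amount of labor demanded changes.

    Assumptions (illustrative only):
      - output per worker rises by `productivity_gain` (e.g. 1000.0 for 1000x)
      - price falls by the same factor
      - quantity demanded scales as price ** (-demand_elasticity)
    """
    if productivity_gain <= 0:
        raise ValueError("productivity_gain must be positive")
    # Price falls by 1/gain, so quantity demanded rises by gain ** elasticity.
    quantity_multiplier = productivity_gain ** demand_elasticity
    # Each worker produces `gain` times more, so labor needed is quantity / gain.
    return quantity_multiplier / productivity_gain


# Hypothetical elasticities chosen only to show the two regimes.
for label, elasticity in [
    ("elastic demand (printing-press-like)", 1.2),
    ("inelastic demand (farming-like)", 0.3),
]:
    change = labor_multiplier(1000.0, elasticity)
    print(f"{label}: labor demanded changes by a factor of {change:.3g}")
```

Under these assumptions the labor force grows only when the elasticity is above 1, which is one way to read the gap between authors and farmers.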

Third: computers. Specifically, the shift from the job title of computer to the name of the machine that replaced it. The skill of solving complicated numeric equations by hand was outright replaced. There was some intermediate period of productivity enhancement where the human computers or cashiers used calculators, but eventually these tools became so cheap and easy for anyone to use that a separate, specialized position for someone who just inputs and outputs numbers disappeared. This industry was replaced by a new industry of people who programmed the automation of the previous one. Software engineering now produces and employs many more resources, in shares and absolute numbers, than the industry of human computers did. The skills required and rewarded in this new industry are different, though there is some overlap in skills which helped some computers transition into programmers.

Finally: the ice trade. In the 19th and early 20th centuries, before small ice machines were common, harvesting and shipping ice around the world was a large industry employing hundreds of thousands of workers. In the early 19th century this meant sawing ice off of glaciers in Alaska or Switzerland and shipping it in insulated boats to large American cities and the Caribbean. Soon after electricity became widely available, large artificial ice farms popped up closer to their customer base. By WW2 the industry had collapsed and been replaced by home refrigeration. This is similar to the computer story, but the replacement job of manufacturing refrigerators never grew larger than the globe-spanning, labor-intensive ice trade.

This framework of analogies is useful for projecting possible futures for different cognitive labor industries. It helps concretize two important variables: whether the tech will be a productivity enhancing tool for human labor or an automating substitute and whether the elasticity of demand for the outputs of the augmented production process is high enough to raise the quantity of labor input.
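As a rough sketch, the two variables can be read as a lookup from a pair of yes/no answers to the closest analogy. The names `Scenario` and `closest_analogy` are hypothetical, and treating each variable as a clean boolean is my simplification; real industries will sit somewhere in between.

```python
from enum import Enum


class Scenario(Enum):
    PRINTING_PRESS = "printing press"
    MECHANIZED_FARMING = "mechanized farming"
    COMPUTERS = "computers"
    ICE_TRADE = "ice trade"


def closest_analogy(replaces_job: bool, demand_is_elastic: bool) -> Scenario:
    """Map the two variables onto the four analogies.

    replaces_job: True if the tech is an automating substitute for the job,
        False if it is a productivity-enhancing tool for human labor.
    demand_is_elastic: True if quantity demanded grows enough to raise the
        quantity of labor (in the original or the replacement industry).
    """
    if not replaces_job:
        # Tool for existing workers: outcome depends on how much demand grows.
        return Scenario.PRINTING_PRESS if demand_is_elastic else Scenario.MECHANIZED_FARMING
    # Job replaced outright: outcome depends on the size of the replacement industry.
    return Scenario.COMPUTERS if demand_is_elastic else Scenario.ICE_TRADE


print(closest_analogy(replaces_job=False, demand_is_elastic=True).value)
```

The point of writing it this way is only to make explicit that both answers are needed before any of the four outcomes can be picked.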

All of these analogies are relevant to the future of cognitive labor. In my particular research and writing corner of cognitive labor, the most optimistic story is the printing press. The current biggest value-adds for this work (literature synthesis, data science, and prose) seem certain to be automated by AI. But as with the printing press, automating the highest value parts of a task is not sufficient to decrease the income, influence, or number of people doing it. Google automated much of the research process but it has supported the effusion of thousands of incredible online writers and researchers.

Perhaps this can continue and online writing will become even more rewarding, higher quality, and popular. This requires the demand for writing and research to expand enough to more than offset the increased productivity of each writer already producing it. With the printing press, this meant more people reading more copies of the same work. With the internet, there is already an essentially infinite supply of online writing and research that can be accessed and copied for free. Demand is already satiated there, but demand for the highest quality pieces of content is not. In my life, at least, there is room for more Scott Alexander quality writing despite being inundated with lots of content below that threshold. AI may enable a larger industry of people producing writing and research of the highest quality.

If quantity demanded, even for these highest quality pieces of content, does not increase enough, then we will see writing and research become more like mechanized farming: an ever smaller number of people using capital investment to satiate the world’s demand. Highly skewed winner-take-all markets on YouTube, larger teams in science, and the publishing market may be early signs of this dynamic.

The third and fourth possibilities, where so many tasks in a particular job are automated that the entire job is replaced, seem most salient for programmers and paralegals. It would be a poetic future where programmer, the job title which rose to replace the now archaic “computer,” also becomes an archaic term. This could happen if LLMs fully automate writing programs to fulfill a given goal and humans graduate to long term planning and coordination of these programmers.

The demand for the software products that these planners could make in cooperation with LLMs seems highly elastic, so most forms of cognitive labor seem safe from the ice trade scenario for this reason. Perhaps automating something like a secretary would not increase the quantity demanded for the products they help produce enough to offset that decline in employment.

When predicting the impact of AI on a given set of skills most people only focus on the left-hand axis of the 2x2 table above. But differences in the top axis can flip the sign of expected impact. If writing and research will get enhanced productivity from AI tools, they can still be bad skills to invest in if all of the returns to this productivity will go to a tiny group of winners in the market for ideas. If your current job will be fully automated and replaced by an AI, it can still turn out well if you can get into a rapidly expanding replacement industry.

The top axis also has the more relevant question for the future of cognitive labor, at least the type I do: writing and research. Is there enough latent demand for good writing to accommodate both a 10x more productive Scott Alexander and a troupe of great new writers? Or will Mecha-Scott satiate the world and leave the rest of us practicing our handwriting in 1439?

The whole idea of estimating P(doom) is bad, because nobody can estimate it well and having a bad estimate isn’t useful. One lesson of theoretical CS is that there are functions you cannot approximate well.

I think you are better off thinking in “scenarios”, like the pessimistic scenario is that AI plateaus in the very near future, the doomer scenario is that AI fundamentally outcompetes humanity, a medium/good scenario is that multiple new trillion dollar companies are formed but there is no “singularity”, etc. And then accept that you cannot estimate the likelihood of the different scenarios, but you could still use them as a tool for planning.

Dynomight wrote up some additional analogies... I like your 2x2 framing though.

https://dynomight.net/llms/